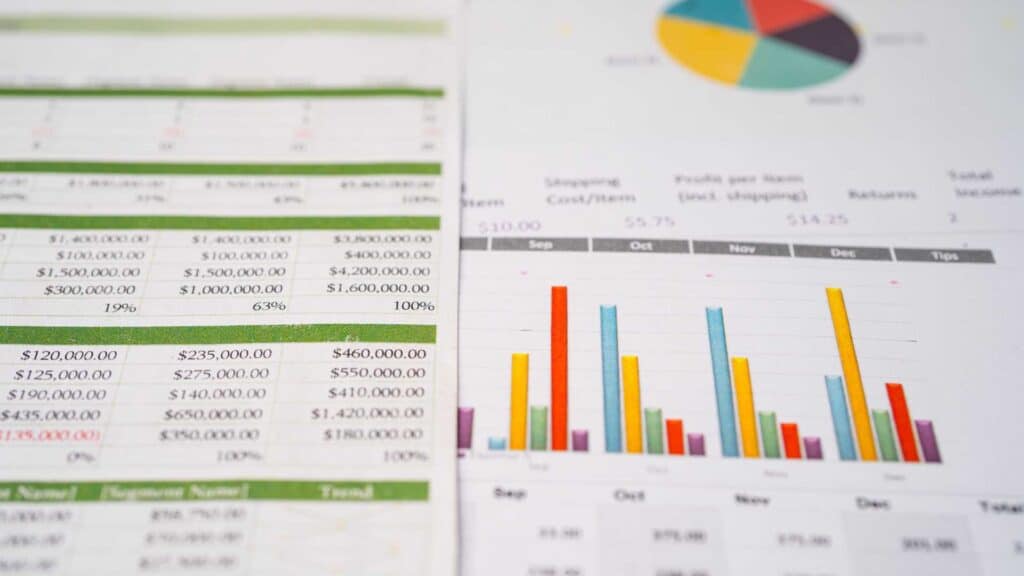

Poor data quality costs organizations an average of $12.9 million a year. That figure signals a deeper data trust deficit that undercuts forecasts, slows growth and weakens leadership’s confidence in the numbers. Many RevOps teams respond with periodic CRM cleanups, an approach that never catches up with the rate at which data decays.

The problem is not your data. Fragmented go-to-market processes create the failure.

Instead of offering another tactical checklist, this article breaks down how siloed tools and reactive fixes set teams up to fail and introduces a systematic framework that builds a trustworthy foundation for accurate forecasting, fair compensation and reliable AI-driven growth.

The Real Cost of Bad Data: A Breakdown of GTM Failure

The data trust deficit reaches far beyond inaccurate reports. It disrupts core go-to-market functions and drives inefficiency that hits revenue. Each flawed record compounds the problem.

- Stale opportunity data makes AI-driven forecasts unreliable, which leaves planning based on low-confidence signals.

- Inaccurate territory or account data produces unbalanced patches and missed quotas, which demoralizes top performers.

- Duplicate or unassigned records trigger commission disputes that erode the sales team’s trust in their compensation.

- Flawed foundational data sets expensive AI initiatives up to fail before they start.

Poor data quality belongs on the revenue agenda, not just IT’s, because it hits sales performance, compensation and strategic investments.

Why Traditional Data Hygiene Fails

Many RevOps leaders double down on cleanup cycles and enrichment tools. That playbook misses the mark because it reacts to decay after the fact, a losing race when reports estimate 70.3% of data becomes outdated every year.

Tool sprawl makes this worse. When teams use disconnected systems for planning, enrichment and validation, they create conflicting records and process friction. Without one unified system, data standards depend on manual compliance and periodic cleanup projects that are expensive, temporary and unsustainable. The core issue is a lack of enforcement through policy-driven automation.

Reactive cleanup traps teams in a perpetual, resource-draining cycle because it treats the symptom, not the cause of systemic data decay.
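
To put the 70.3% annual decay estimate in operational terms, the sketch below converts it into a monthly rate and shows how much of a freshly cleaned dataset would still be accurate after a few months. It assumes decay compounds at a constant monthly rate, which is a simplification made for this example and not a claim from the cited reports. The names `ANNUAL_DECAY`, `monthly_decay` and `share_still_accurate` are illustrative.

```python
ANNUAL_DECAY = 0.703  # share of records outdated after 12 months (estimate cited above)

# Assumption: records go stale at a constant monthly rate that compounds.
monthly_decay = 1 - (1 - ANNUAL_DECAY) ** (1 / 12)


def share_still_accurate(months_since_cleanup: int) -> float:
    """Fraction of records still accurate N months after a full cleanup."""
    return (1 - monthly_decay) ** months_since_cleanup


for months in (3, 6, 12):
    share = share_still_accurate(months)
    print(f"{months:>2} months after cleanup: {share:.0%} still accurate")
```

Under that assumption, roughly a quarter of records are stale again three months after a cleanup, which is why a quarterly or annual cleanup cadence cannot keep pace.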

The Systematic Solution: A Framework for End-to-End Data Integrity

Top RevOps teams understand a simple truth: data hygiene is the outcome of a unified GTM process, not a separate task. Instead of cleaning up messes, they build a system where data integrity becomes the default. The framework rests on three pillars that connect planning to performance.

Pillar 1: Run on One Shared Dataset

A unified platform creates the conditions for data integrity. By centralizing planning, execution and performance data, organizations remove the silos that create conflicting information. For example, Udemy consolidated planning and execution into one shared dataset for its go-to-market model and reduced planning time by 80 percent, with all teams operating from the same reliable data.

Pillar 2: Automate Data Governance with Policies

Lasting data integrity cannot depend on manual effort. Leaders should automate data governance by implementing rules for data capture, validation and deduplication that run before bad data enters the CRM. This proactive, policy-driven model applies standards automatically, which preserves consistency and accuracy at scale.
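
A minimal sketch of what policy-driven validation and deduplication at the point of capture could look like is shown below. It is illustrative only and does not represent any specific platform's API: the `Lead` record, its fields, the rule names and the `admit` function are all hypothetical, and the email pattern is a deliberately simple check.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately simple email pattern for illustration.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass(frozen=True)
class Lead:
    email: str
    company: str
    owner: str  # assigned rep; an empty string means unassigned


def normalize(lead: Lead) -> Lead:
    """Capture rule: trim whitespace and lowercase the email."""
    return Lead(
        email=lead.email.strip().lower(),
        company=" ".join(lead.company.split()),
        owner=lead.owner.strip(),
    )


def violations(lead: Lead) -> list[str]:
    """Validation rules: return the policies this record breaks."""
    problems = []
    if not EMAIL_RE.match(lead.email):
        problems.append("invalid email")
    if not lead.company:
        problems.append("missing company")
    if not lead.owner:
        problems.append("unassigned owner")
    return problems


def admit(incoming, existing_emails):
    """Apply every policy before a record is allowed into the CRM."""
    seen = {e.strip().lower() for e in existing_emails}
    accepted, rejected = [], []
    for raw in incoming:
        lead = normalize(raw)
        problems = violations(lead)
        if lead.email in seen:  # deduplication rule
            problems.append("duplicate email")
        if problems:
            rejected.append((lead, problems))
        else:
            seen.add(lead.email)
            accepted.append(lead)
    return accepted, rejected


incoming = [
    Lead(" Ana@Example.com ", "Acme  Corp", "rep-1"),
    Lead("ana@example.com", "Acme Corp", "rep-2"),  # same person, different casing
    Lead("bob@example", "Beta LLC", ""),            # bad email, no owner
]
accepted, rejected = admit(incoming, existing_emails=[])
for lead, problems in rejected:
    print(lead.email, "->", ", ".join(problems))
```

The design point is that rejected records are returned along with the reasons, so they can be routed for correction at the source instead of being fixed in a later cleanup.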

Pillar 3: Integrate Planning and Performance

When planning and performance connect, data quality improves continuously. Clean inputs from territory and quota design flow into performance analytics, which gives leaders trustworthy insights for coaching and forecasting. This integration ensures that strategic plans are built on and measured by reliable data.

Teams achieve true data integrity when a unified GTM system enforces rules from planning through execution.

How AI Magnifies Data Hygiene Problems

Artificial intelligence amplifies what you feed it. With clean, structured data, it uncovers useful insights and improves efficiency. With the messy, incomplete and conflicting data that sits in most CRMs, it multiplies the chaos, producing flawed recommendations and confident but incorrect predictions.

Leaders across the industry echo this point. On an episode of The Go-to-Market Podcast, host Amy Cook discussed this challenge with Adam Cornwell, who noted, “AI can work, but if you don’t have the data foundation that’s set up properly for AI, you can’t just lay AI on top of crappy data because the AI can be crappy. And so garbage in, garbage out.”

Investing in AI before fixing your data foundation does not produce intelligent insights; it produces faster, more confident bad decisions.

“Fix the data foundation first or AI will confidently steer you wrong.”

From Fragmented Data to a Unified Revenue Command Center

Solving deep-rooted data hygiene issues is not about buying another enrichment tool. It requires a unified platform where clean data becomes the operating standard. The challenge is widespread: nearly half of RevOps professionals (47 percent) say their data quality is poor. A fragmented approach cannot solve a systemic problem.

A unified revenue command center lets teams plan confidently, perform well and pay accurately because every stage of the revenue lifecycle stays connected. The Fullcast 2025 Benchmarks Report found that logo acquisitions are 8x more efficient with ICP-fit accounts, a result only possible when clean, accurate data drives the GTM plan. With a platform like Fullcast Revenue Intelligence, leaders can diagnose every deal and territory using reliable data, not gut feel.

The solution to systemic data issues is not another point solution for cleanup but a unified platform that makes data integrity the default operational standard.

Stop Cleaning Data and Start Building Your Revenue Engine

Endless CRM cleanups and enrichment sprints signal a deeper issue. Persistent data hygiene problems are not the root cause of your revenue challenges; they are a symptom of a fragmented go-to-market process.

A trustworthy revenue operation does not come from more cleanup tools or manual effort. It takes a strategic shift. Instead of treating data as a separate problem, leading RevOps teams adopt a unified platform that enforces data integrity by design.

Ready to move from reactive repair to proactively building your revenue engine on a foundation of trust? See how Fullcast helps improve forecast accuracy and quota attainment by addressing data issues at their source, so reliable data becomes the default, not the exception.

FAQ

1. Why is poor data quality actually a go-to-market problem and not just a data problem?

Poor data quality is a symptom of fragmented go-to-market processes, not the root cause. When sales, marketing, and revenue operations work in disconnected systems without unified workflows, data naturally becomes inconsistent and unreliable, creating a trust deficit that undermines forecasting and strategic decision-making.

2. Why doesn’t cleaning up CRM data solve the data quality problem long-term?

Reactive data cleanup treats the symptom rather than the root cause. Data decays continuously as contacts change jobs, companies evolve, and market conditions shift, which means manual cleanup becomes a perpetual, resource-draining cycle that never addresses why the data degrades in the first place.

3. How does poor quality CRM data affect AI systems and revenue predictions?

AI amplifies whatever is in the data it works from. When you layer AI on top of messy, incomplete CRM data, it produces unreliable forecasts and flawed recommendations. The AI operates confidently on flawed inputs, leading teams to make strategic decisions based on predictions that don’t reflect actual market conditions. In effect, it automates bad decisions and speeds them up.

4. How does fragmented go-to-market infrastructure cause data quality issues?

When go-to-market teams use separate systems for planning, execution, and measurement, data gets duplicated and modified in each one, and it becomes inconsistent across platforms. This fragmentation prevents a single source of truth and makes it impossible to maintain data integrity without constant manual intervention.

5. What does a unified GTM system do differently to maintain data quality?

A unified GTM system makes clean data the default by enforcing governance rules automatically from planning through execution. Instead of relying on manual cleanup, it creates a single source of truth where data integrity is built into the workflow itself.

6. Why do RevOps teams struggle with data quality despite having cleanup tools?

Cleanup tools address data quality reactively after problems have already occurred. Without fixing the underlying fragmented processes that cause data decay, these tools only provide temporary relief while teams remain trapped in an endless cycle of identifying and correcting data issues.

7. What role does data quality play in targeting the right accounts?

Clean, accurate data is essential for identifying and prioritizing accounts that match your ideal customer profile. Without reliable firmographic and behavioral data, go-to-market teams waste resources pursuing poor-fit prospects instead of focusing their efforts where they’ll generate the highest returns.

8. How does integrating planning with execution improve data integrity?

When planning and execution exist in the same unified system, the data used to build strategies automatically flows into operational workflows. This integration eliminates the manual handoffs and duplicate entries that typically introduce errors and inconsistencies.

9. What’s the fundamental shift needed to solve data quality problems permanently?

The shift is from treating data quality as a cleanup problem to recognizing it as a systems architecture challenge. True data integrity comes from building unified GTM infrastructure where clean data is an automatic outcome of properly designed workflows, not something achieved through constant manual maintenance.